AI Governance & Responsible AI: Turning Risk into Strategic Advantage

Explore how AI Governance & Responsible AI is reshaping enterprise adoption with hard data, market forecasts, and practical frameworks. Learn why only a fraction of organizations are truly governing AI, and how to fix that with real-world examples, tools, and vendor guidance.

Introduction

Every enterprise on the planet is trying to unlock value from AI right now — but only a small subset are actually doing it responsibly. AI is no longer just an innovative technology: it’s strategic infrastructure. And just like any critical infrastructure, it needs governance, oversight, accountability, and risk controls.

Yet the numbers tell a dramatic story: many organizations lack basic governance policies, haven’t assigned clear ownership, and remain unprepared for the regulatory and ethical challenges that are already landing at their feet. This disconnect between adoption and responsibility is exactly why AI Governance & Responsible AI is now a top board-level concern.

In this article, we’ll use actual industry data, market trends, regulatory context, and practical examples to explain how organizations are governing — or failing to govern — AI today.

The pattern is clear: AI models may work, but organizations often aren’t ready to run them in real operating conditions.

What Is AI Governance & Responsible AI?

At its core, AI Governance & Responsible AI means building structures, policies, and practices to ensure AI systems are ethical, safe, compliant, fair, and aligned with both business goals and societal values.

It typically includes:

- Governance frameworks for accountability and oversight

- Ethical principles for fairness and bias mitigation

- Technical practices for transparency, explainability, and monitoring

- Regulatory readiness for evolving legal requirements

This blend ensures AI is not just powerful, but also trustworthy.
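One of the technical practices listed above, monitoring for bias, can be made concrete with a simple metric. The sketch below computes a demographic parity gap: the difference between the highest and lowest approval rates across groups. The group labels, the sample decisions, and the function names are illustrative assumptions, not data or tooling from any source cited in this article.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions):
    """Difference between the highest and lowest group approval rates."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions for two groups, A and B.
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

gap = demographic_parity_gap(sample)
print(f"approval rates: {selection_rates(sample)}")
print(f"demographic parity gap: {gap:.2f}")
```

A gap near zero suggests similar treatment across groups on this one measure. What counts as an acceptable gap is a policy decision, and a single metric is a starting point for review rather than proof of fairness.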

How Much AI Governance Adoption Is Happening (and How Much Isn’t)?

Organizations that succeed treat AI adoption as enterprise change, not a technology rollout. The goal is to build AI-ready organizations, not just AI solutions that lack governance or compliance.

Here’s where the data gets sobering.

📊 Governance Programs Are Rare

- Only ~25% of organizations have fully implemented AI governance programs, despite broad awareness of risk. (Knostic)

- Many organizations talk about governance, but only 43% have a governance policy in place, and 29% have none at all. (aidataanalytics.network)

- Half of leaders in another recent survey feel overwhelmed by AI regulations, showing that awareness doesn’t always translate into action. (Vanta)

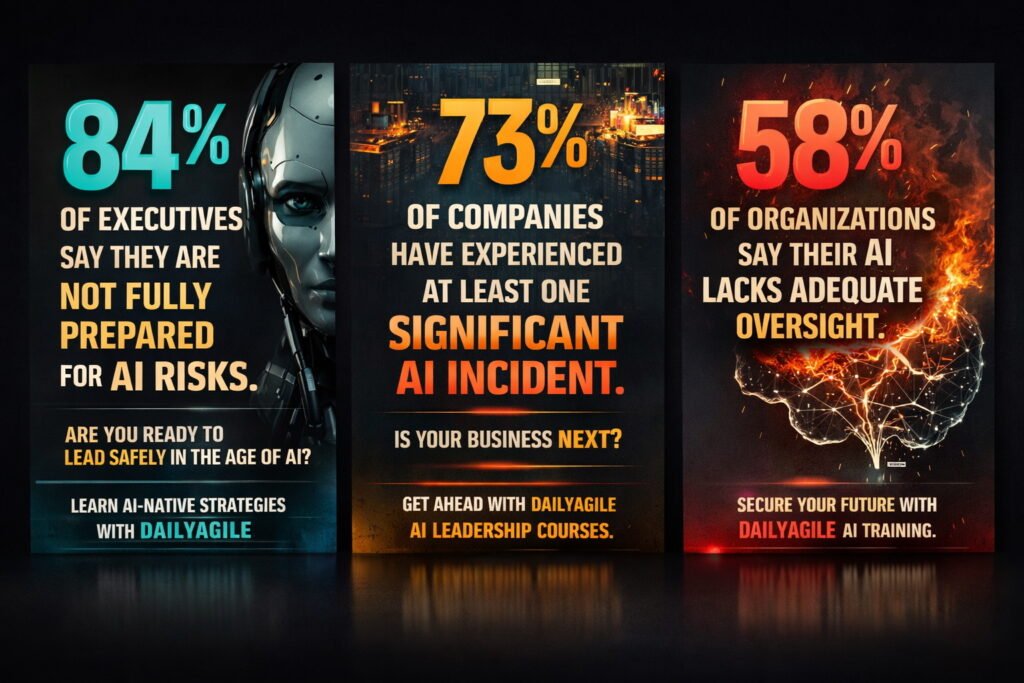

📊 Risk Controls and Preparedness Are Low

According to an AI risk report from BigID:

- 93.2% of organizations lack confidence in securing AI-related data. (BigID)

- 80.2% feel unprepared for AI regulatory compliance. (BigID)

- Only 6.4% have advanced AI security strategies. (BigID)

These stats illustrate a major disconnect: most enterprises are adopting AI without adequate governance controls in place.

This gap is exactly where DailyAgile focuses its work.

DailyAgile’s AI-Native Foundations and AI-Native Change Agent certifications are designed to help organizations:

- Understand why 62% of AI pilots fail or deliver little value (read more in our blog post on why 62% of AI pilots fail)

- Develop AI-native decision-making and leadership skills

- Integrate AI responsibly into enterprise operating models

- Lead change across teams, portfolios, and business units

- Move AI initiatives confidently from pilot to production

These in-person programs build organizational readiness, not tool dependency.

Building AI-Ready Organizations: Capability Over AI Hype

In-Person, Enterprise-Scale Change Requires Human Alignment

DailyAgile’s AI-Native Foundations and AI-Native Change Agent certifications are designed specifically for this reality:

- Not tool training

- Not hype cycles

- But behavioral, organizational, and leadership readiness for AI-enabled enterprises. Check out our virtual five-week AI Foundations to Breakthrough program, certified by Penn State University.

Delivered in person at the Penn State Great Valley campus in Malvern, PA, or at your location, these programs focus on:

- Decision accountability in AI-augmented environments

- Organizational change patterns created by AI

- Scaling agility with AI, not around it

- Preparing leaders to govern AI responsibly

Getting Started: A Step-by-Step Guide

Here’s a practical approach to move from awareness to action:

- Map current AI use and create an inventory

- Classify each use by risk and impact

- Establish governance policies and oversight committees

- Deploy monitoring and explainability tooling

- Train teams on Responsible AI principles

- Iterate and improve continuously

Governance is not a one-and-done project — it’s an ongoing operational discipline.
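The first two steps in the guide above, building an inventory and classifying each use by risk, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `AIUseCase` fields, the three risk tiers, and the classification rules are hypothetical and would need to be replaced by criteria from your own governance policy and applicable regulations.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class AIUseCase:
    name: str
    owner: str                # accountable team or person
    affects_people: bool      # e.g. hiring, lending, or health decisions
    uses_personal_data: bool
    human_in_the_loop: bool   # a person reviews outputs before action


def classify(use_case: AIUseCase) -> RiskTier:
    """Toy rule set (an assumption); real criteria come from your policy."""
    if use_case.affects_people and not use_case.human_in_the_loop:
        return RiskTier.HIGH
    if use_case.affects_people or use_case.uses_personal_data:
        return RiskTier.MEDIUM
    return RiskTier.LOW


def review_queue(items):
    """Order the inventory so the highest-risk uses are reviewed first."""
    return sorted(items, key=lambda u: classify(u).value, reverse=True)


# Hypothetical inventory entries for illustration only.
inventory = [
    AIUseCase("support chatbot", "Customer Care", False, True, True),
    AIUseCase("log anomaly detection", "IT Ops", False, False, True),
    AIUseCase("resume screening", "HR", True, True, False),
]

for uc in review_queue(inventory):
    print(f"{classify(uc).name:<6} {uc.name} (owner: {uc.owner})")
```

Keeping the inventory as structured data, with a named owner on every entry, makes the later steps (oversight, monitoring, and periodic review) easier to automate and audit.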

Frequently Asked Questions (FAQs)

Q: Is AI governance required for every company using AI?

Yes — whether you’re a startup or an enterprise, governance protects against unfair, non-compliant, or risky decisions.

Q: Does responsible AI slow innovation?

No — proper governance enables faster, safer scaling of AI deployments.

Q: Who should lead AI governance?

Cross-functional leadership with representation from legal, security, risk, and business units.

Q: Are there standards for AI governance?

Yes. Regulations such as the EU AI Act and standards like ISO/IEC 42001 help define responsible practices.

Conclusion

AI governance isn’t an academic buzzword — it’s a practical business imperative. The data makes it clear: while AI adoption has reached near-mainstream levels, governance adoption is still nascent and uneven. But organizations that build responsible, transparent, and governed AI systems are already seeing advantages in ROI, compliance readiness, and customer trust. In 2026 and beyond, governance will no longer be a differentiator — it will be a core enabler of sustainable AI success.

Why wait? Sign up today and master the art of AI leadership for lasting success. We can customize AI programs for your industry, such as AI in HR, AI in Health Care, AI in Supply Chain, and AI in Manufacturing.